Integrate a Custom LLM App into Slack Using PlugBear

Learn how to integrate your own LLM apps into Slack using the PlugBear Python SDK.

Previously, PlugBear let you integrate OpenAI Assistants without writing any code, but that approach offered limited flexibility. With the launch of the PlugBear Python SDK, PlugBear now supports custom LLM apps. This means you can use any AI model or API, including OpenAI, Anthropic, and Gemini. Let's dive into how to integrate a custom LLM app with PlugBear.

Step 1: Integrate PlugBear SDK into Your LLM App

Install PlugBear SDK

If you're using FastAPI, use the command below to install the SDK.

```shell
pip install 'plugbear[fastapi]'
```

For other setups, simply use the command below.

```shell
pip install plugbear
```

Get PlugBear API Key

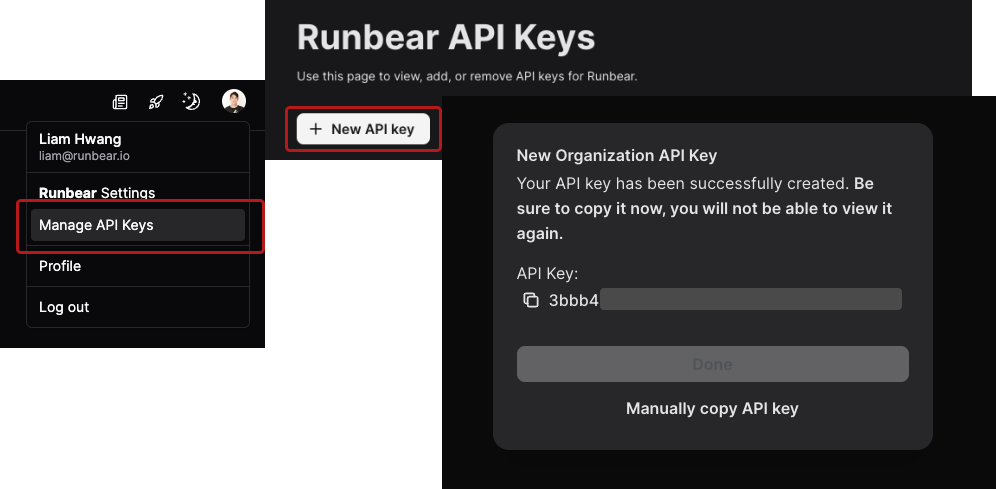

You can get your API key from the Profile menu in the top-right corner. Click "Manage API Keys" and then "New API Key". Copy the generated key.
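Rather than hardcoding the key in your source code, you may prefer to read it from an environment variable. Here is a minimal sketch, assuming you export the key as PLUGBEAR_API_KEY; that variable name and the load_plugbear_api_key helper are our own choices for illustration, not something the SDK requires.

```python
import os


def load_plugbear_api_key(env_var: str = "PLUGBEAR_API_KEY") -> str:
    # Hypothetical helper: read the key from an environment variable
    # (the variable name is an assumption, not an SDK convention)
    api_key = os.environ.get(env_var, "").strip()
    if not api_key:
        # Fail fast at startup with a clear message if the key is missing
        raise RuntimeError(
            f"Set the {env_var} environment variable to your PlugBear API key"
        )
    return api_key
```

You could then write PLUGBEAR_API_KEY = load_plugbear_api_key() in the initialization example instead of pasting the key directly.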

Initialize PlugBear SDK and Set Up Endpoint

Here's an example, using FastAPI and OpenAI's Chat Completions API, of how to initialize the SDK and set the endpoint path that PlugBear will send requests to. Replace the value of PLUGBEAR_API_KEY with the API key you copied. You can also change the endpoint path to any path you like.

```python
import contextlib

from fastapi import FastAPI
from openai import AsyncOpenAI

import plugbear.fastapi

# Replace with your copied PlugBear API key
PLUGBEAR_API_KEY = "PLUGBEAR_API_KEY"

# Endpoint path for PlugBear requests
PLUGBEAR_ENDPOINT = "/plugbear"

# Async OpenAI client: it reads your OpenAI key from the OPENAI_API_KEY
# environment variable and does not block the event loop while waiting
client = AsyncOpenAI()


async def some_llm(context: plugbear.fastapi.Request) -> str:
    # Convert the conversation PlugBear sends into Chat Completions messages
    chat_completion = await client.chat.completions.create(
        model="gpt-4-1106-preview",
        messages=[
            {"role": msg.role, "content": msg.content}
            for msg in context.messages
        ],
    )
    # Return the model's reply text to PlugBear
    return chat_completion.choices[0].message.content


@contextlib.asynccontextmanager
async def lifespan(app: FastAPI):
    # Register PlugBear with the app at startup, answering requests with some_llm
    await plugbear.fastapi.register(
        app,
        llm_func=some_llm,
        api_key=PLUGBEAR_API_KEY,
        endpoint=PLUGBEAR_ENDPOINT,
    )
    yield


app = FastAPI(lifespan=lifespan)
```

For more examples, see our examples on GitHub.
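Before wiring up a real model, it can help to check the end-to-end flow with a stub. The sketch below assumes only what the initialization example shows: the function passed as llm_func is async, its argument exposes messages, each message has role and content, and it returns a string. StubMessage and StubRequest are hypothetical stand-ins for plugbear.fastapi.Request, so you can exercise the function without PlugBear or an OpenAI key.

```python
import asyncio
from dataclasses import dataclass, field


# Hypothetical stand-ins mimicking the attributes used in the example
@dataclass
class StubMessage:
    role: str
    content: str


@dataclass
class StubRequest:
    messages: list[StubMessage] = field(default_factory=list)


async def echo_llm(context) -> str:
    # Walk the conversation backwards to find the latest user message
    for msg in reversed(context.messages):
        if msg.role == "user":
            return f"You said: {msg.content}"
    return "No user message received."


if __name__ == "__main__":
    request = StubRequest(messages=[
        StubMessage(role="system", content="You are a helpful assistant."),
        StubMessage(role="user", content="Hello, bear!"),
    ])
    print(asyncio.run(echo_llm(request)))  # prints "You said: Hello, bear!"
```

To try it in your app, you could pass llm_func=echo_llm to plugbear.fastapi.register in place of the OpenAI-backed function, then switch back once messages flow from Slack or Discord.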

Step 2: Configure Your App in PlugBear

- Navigate to the "LLM apps" menu and click "Add App".

- Select "PlugBear Python SDK" as your app type.

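- In the "Your LLM App Endpoint" field, enter the full URL where PlugBear can reach your app, including the endpoint path you set earlier (for example, https://your-app.example.com/plugbear).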
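The endpoint value combines your app's base URL with the path you registered. As a small illustration, the hypothetical helper below (not part of the SDK) joins the two with exactly one slash; https://my-llm-app.example.com is a placeholder domain.

```python
def build_endpoint_url(base_url: str, endpoint_path: str) -> str:
    # Drop trailing slashes from the base and leading slashes from the path,
    # then join with a single slash so any existing base path is preserved
    return f"{base_url.rstrip('/')}/{endpoint_path.lstrip('/')}"


if __name__ == "__main__":
    print(build_endpoint_url("https://my-llm-app.example.com/", "/plugbear"))
    # prints "https://my-llm-app.example.com/plugbear"
```

We avoid urllib.parse.urljoin here because, when the second argument starts with "/", it replaces any path already on the base URL (so a base of https://example.com/api/ would lose /api).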

Step 3: Connect to Slack or Discord and Interact with Your LLM App

- Go to the "Connections" page in PlugBear.

- Click "New Connection".

- Choose your Slack workspace or Discord server, select your LLM app, and click "Create".

- Start interacting with @PlugBear in your chosen platform to test the integration.

You're all set! You can now chat with your custom LLM app from Slack or Discord. For more details, see our example code and the SDK documentation.